Best Settings For Acer Monitor

Disappointed by your monitor's image quality? You might be able to improve it through monitor calibration. Learning to calibrate your monitor will make the most of its potential, and while you can buy expensive tools for this job, you can often achieve a noticeable improvement without them.

This guide will explain how to calibrate your monitor, step by step.

How to start monitor calibration in Windows 10

Windows and MacOS have very basic built-in calibration utilities. They're limited and won't help you understand how your monitor works, but they're a good place to start.

Here's how to start calibrating a monitor on Windows.

- Use Windows Search to search for display calibration.

- Select Calibrate display color from the results.

- Follow the on-screen instructions.

Here's how to start calibrating a monitor on MacOS.

- Open System Settings.

- Select Displays.

- Open the Color tab in the Displays menu.

- Tap Calibrate.

- Follow the on-screen instructions.

Taking the next step

The calibration utilities in Windows 10 and MacOS are just a start. They will help you work out serious issues with your calibration, like an incorrect contrast setting or a wildly incorrect display gamma value. They're more focused on providing a usable image than an enjoyable one, however. You can do more.

Before we get started, let's bust a popular myth about calibration: there is no such thing as a perfect monitor or a perfect calibration. Image quality is subjective and, for most people, the goal of calibration should be improving perceived quality on the monitor you own.

With that said, a variety of standards exist. Each provides a set of values everyone can target. Dozens, perhaps hundreds, of standards exist, but sRGB is the standard most common to computers. Other common standards include:

- DCI-P3, which was created for the professional film industry. Many "professional" computer monitors target DCI-P3, and Apple targets DCI-P3 in its latest Mac computers, as well.

- Adobe RGB, created by Adobe in the late 1990s to provide a standard for its professional software, including Photoshop.

- Rec. 709, a standard created for high-definition television.

You don't need to target these standards. In fact, precisely targeting a standard is impossible without a calibration tool. Still, you'll want to be aware of these standards as you calibrate your monitor because they'll impact how certain monitor settings work. Also, many monitors have settings meant to target them.

How to calibrate resolution and scaling

What you need to know: Your computer's display resolution should always equal your monitor's native resolution. If your monitor's resolution is higher than 1080p, you may need to use scaling to make text readable.

Perhaps it should go without saying, but it's crucial that you select the correct resolution for your monitor. Windows and MacOS typically select the correct resolution by default, but there's always the chance it's wrong.

Both Windows 10 and MacOS place resolution control in their respective Display settings menu. The resolution selected should match the native resolution of your monitor, which describes the number of horizontal and vertical pixels physically present on the display. Most monitors will highlight this in their marketing materials and specifications.

Once resolution is set, you should consider scaling. Imagine a button that's meant to be displayed at 300 pixels wide and 100 pixels tall. This button will appear much larger on a 1080p monitor than on a 4K monitor if both monitors are the same size. Why? Because the pixels on the 1080p monitor are actually larger!

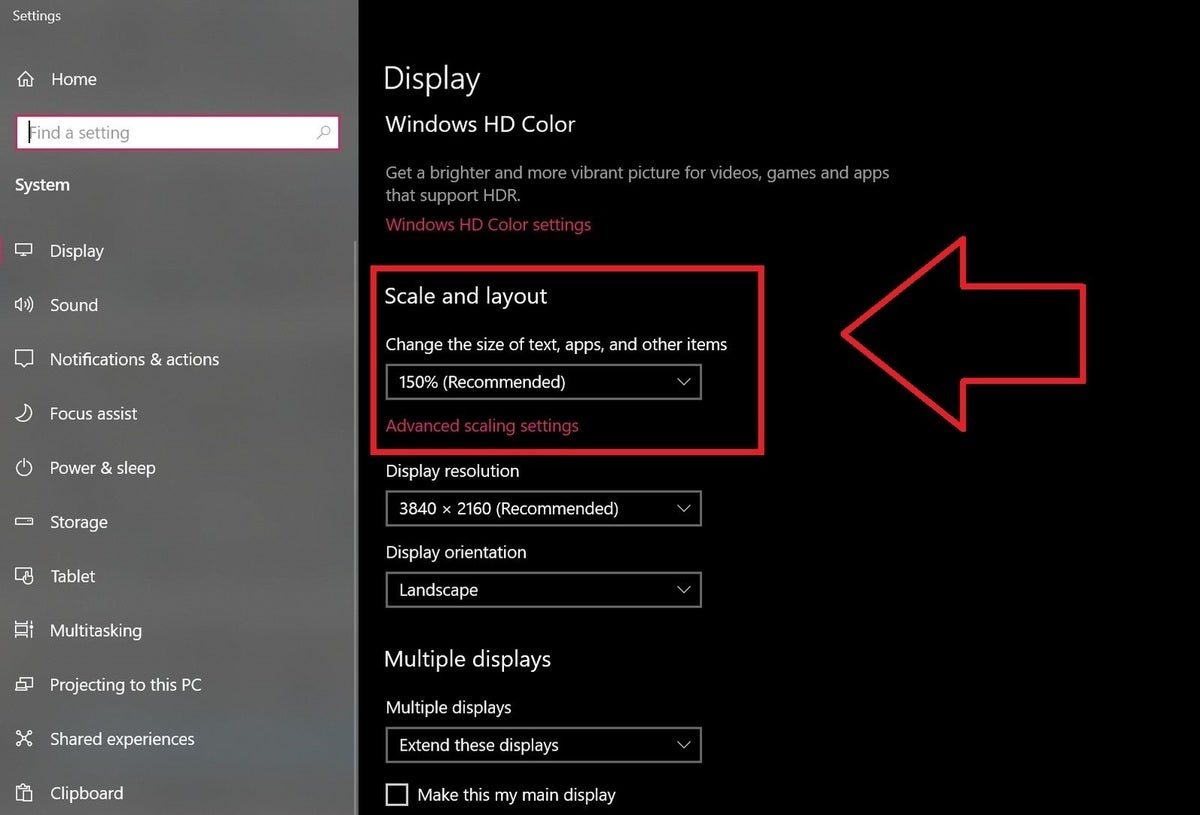

Brad Chacos/IDG Windows 10's resolution scaling option defaults to 150% on a 4K monitor.

Scaling resolves this issue. Again, Windows and MacOS include a scale setting in their respective Display menus. Windows expresses scale as a percentage. A higher percentage scales up content. MacOS instead uses scaled resolution, which is a bit more confusing. You'll change Scaled Resolution to a lower setting to increase the size of the interface.

Unlike resolution, which should always be set to your monitor's native resolution, there's no right answer for scaling. It's a matter of personal preference. Increasing scale will reduce the amount of content you can see at once, which makes multitasking more difficult, but can reduce eye strain or potentially neck and back strain (since you won't feel an urge to lean in).
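To put rough numbers on the button example, here is a minimal Python sketch. It assumes two hypothetical 27-inch monitors (the size is my choice for illustration, not something from a spec sheet) and treats scaling as a simple multiplier on the element's pixel width, which is a simplification of what Windows and MacOS actually do.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density computed from native resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in

def physical_width_in(element_px, ppi, scale_percent=100):
    """Approximate on-screen width (inches) of an element at a given OS scale."""
    return element_px * (scale_percent / 100) / ppi

# Assumed example: two 27-inch monitors, one 1080p and one 4K.
ppi_1080p = pixels_per_inch(1920, 1080, 27)
ppi_4k = pixels_per_inch(3840, 2160, 27)

for label, ppi, scale in [("1080p at 100%", ppi_1080p, 100),
                          ("4K at 100%", ppi_4k, 100),
                          ("4K at 150%", ppi_4k, 150)]:
    width = physical_width_in(300, ppi, scale)
    print(f"{label}: {ppi:.0f} PPI, 300 px button is {width:.2f} in wide")
```

At the same diagonal, the 4K panel has twice the pixel density, so the unscaled button is half as wide. Raising the scale percentage closes part of that gap.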

How to calibrate brightness

What you need to know: Reduce the monitor's brightness to a setting that remains easy to view but doesn't reduce detail in a dark image. If possible, use a light meter on a smartphone to shoot for a brightness of about 200 lux.

You may not be shocked to learn that turning brightness up makes your monitor brighter, and turning it down makes it less bright. Simple enough. But what does this have to do with calibrating a monitor to improve image quality?

Nearly all monitors sold in the last decade have a backlit LCD display. This means they have an LCD panel with a light behind it. The light shines through the LCD to produce an image (otherwise, it'd look like the Game Boy Color).

Matt Smith/IDG Lux Light Meter

It's a simple setup that's thin, light, energy efficient, and easy to produce, but there's a downside. Your monitor's deepest, darkest black level is directly tied to the monitor's brightness. The higher the brightness, the more gray, hazy, and unpleasant dark scenes will appear. You'll notice this in movies, which often rely on dark scenes, and in certain PC game genres, like horror and simulation.

The solution? Turn down the brightness of your monitor as much as possible without making the image seem dim or more difficult to see. If you want to get more precise, you can use a free light measurement app like Lux Light Meter. I recommend about 300 lux for most rooms, though you might want to dip as low as 200 in a nearly pitch-black gaming den.

Aside from improving dark scenes and perceived contrast, reducing brightness can reduce eye strain. Viewing a very bright monitor in a dim room is not pleasant because your eyes must constantly adjust to deal with the difference in brightness between the display and its surroundings.
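The link between brightness and black level can be sketched with a little arithmetic. The snippet below assumes a panel with a fixed 1000:1 contrast ratio and uses made-up luminance values in nits (cd/m²) rather than the lux readings from a phone app. Real panels won't hold a perfectly constant ratio across the brightness range, so treat this as a rough model.

```python
def black_level_nits(white_nits, contrast_ratio):
    """Estimated black luminance for a backlit LCD at a given white level."""
    return white_nits / contrast_ratio

# Assumption: a hypothetical panel with a constant 1000:1 contrast ratio.
PANEL_CONTRAST = 1000

for white in (400, 250, 150):
    black = black_level_nits(white, PANEL_CONTRAST)
    print(f"White at {white} nits -> black at about {black:.2f} nits")
```

Because the backlight sits behind every pixel, dimming it lowers white and black together. The ratio stays put, but the black that reaches your eyes gets darker.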

How to calibrate contrast

What you need to know: View the Lagom LCD contrast test image and adjust contrast so that all bars on the test image are visible.

Contrast is the difference between the lowest and highest level of luminance your monitor can display. The maximum difference a monitor can produce is its contrast ratio. Contrast can be improved by increasing the maximum brightness, lowering the darkest possible black level, or both.

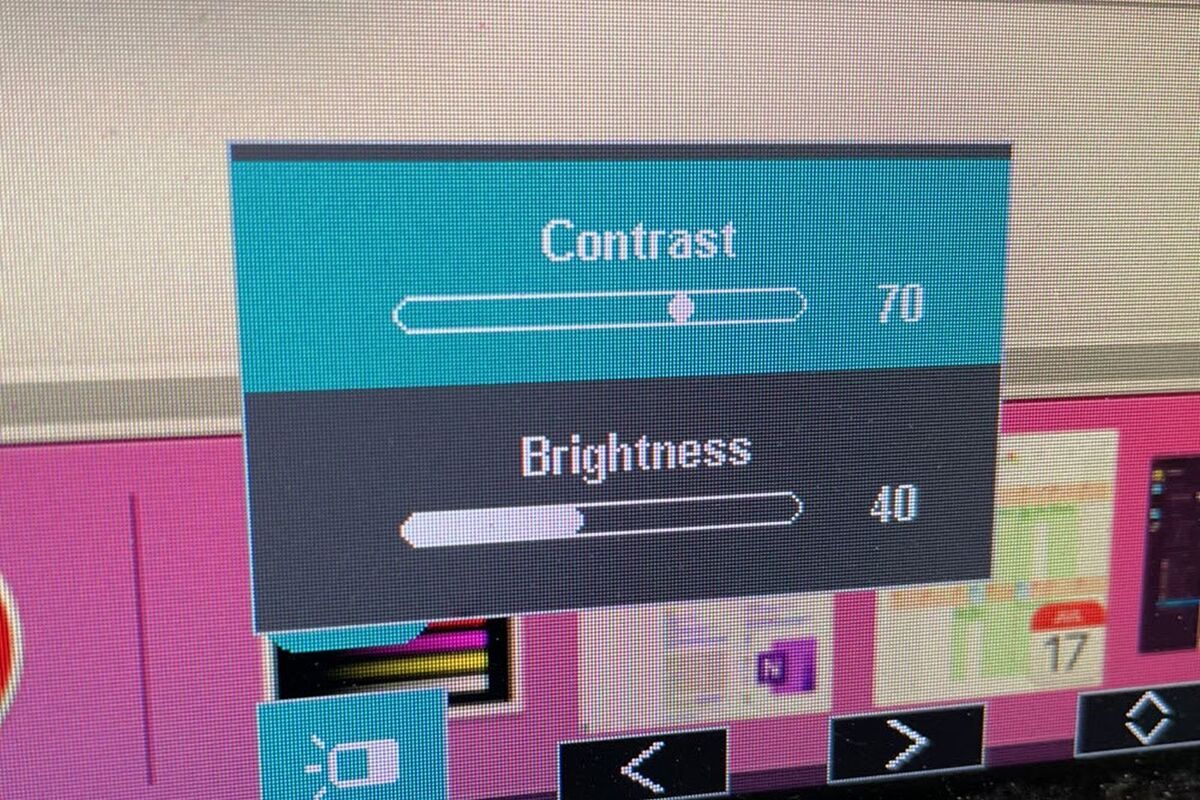

Matt Smith/IDG

All monitors have a contrast setting, but it rarely does what you'd expect. Turning the contrast up to its maximum setting can actually reduce the contrast ratio by bumping up the monitor's deepest black level. It can also crush color and shadow detail.

To calibrate contrast, visit the Lagom LCD contrast test image. An ideal contrast setting will let you see all color bars from 1 to 32. This can be a real challenge for an LCD monitor, especially on the dark end of the image, so you may have to settle for a lack of visible difference in that area.

On the other hand, setting the contrast too high will cause colors at the high end of the spectrum to bleed into one. This problem is avoidable on a modern LCD monitor by turning down the contrast which, in most cases, is set to a high level by default.
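Why do the bright bars bleed into one? A toy model helps. The sketch below assumes the contrast control behaves like a simple gain applied to 8-bit pixel values, with anything above 255 clipped. Real monitor processing is more involved, and the 32 evenly spaced levels are only a stand-in for the test image's bars, not its actual pixel values.

```python
def apply_contrast(levels, gain):
    """Toy model: scale each 8-bit level by a gain and clip at 255."""
    return [min(255, round(value * gain)) for value in levels]

# 32 evenly spaced levels standing in for the 32 bars: 7, 15, ..., 255.
bars = [8 * i - 1 for i in range(1, 33)]

def distinct_bars(gain):
    """Count how many bars remain distinguishable after the gain is applied."""
    return len(set(apply_contrast(bars, gain)))

for gain in (1.0, 1.25):
    print(f"gain {gain}: {distinct_bars(gain)} of {len(bars)} bars distinct")
```

In this model, a gain of 1.25 pushes the brightest seven bars to the same clipped value, which is the "bleeding into one" effect you would see at the top of the test image.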

How to calibrate sharpness

What you need to know: Sharpness is highly subjective, so pick whatever setting looks best to you.

Sharpness is an odd setting. Many monitors let you change sharpness, but sharpness isn't a technical term. There's no objective measurement for sharpness and it's not part of standards like sRGB or DCI-P3.

A change to a monitor's sharpness setting changes how the monitor's post-processing handles the image sent to it. High sharpness will exaggerate details and contrast between objects. That might sound good, but it can lead to chunky artifacts and make details look unnatural. Low sharpness will blur details and contrast, which can look more organic but eventually leads to a smeared, imprecise quality.

There's no right or wrong answer. View a detailed, high-contrast image and flip through your monitor's sharpness settings to decide which appeals most to you.

How to calibrate gamma

What you need to know: Visit the Lagom LCD gamma test image and adjust your monitor's gamma settings until the image indicates a gamma value of 2.2.

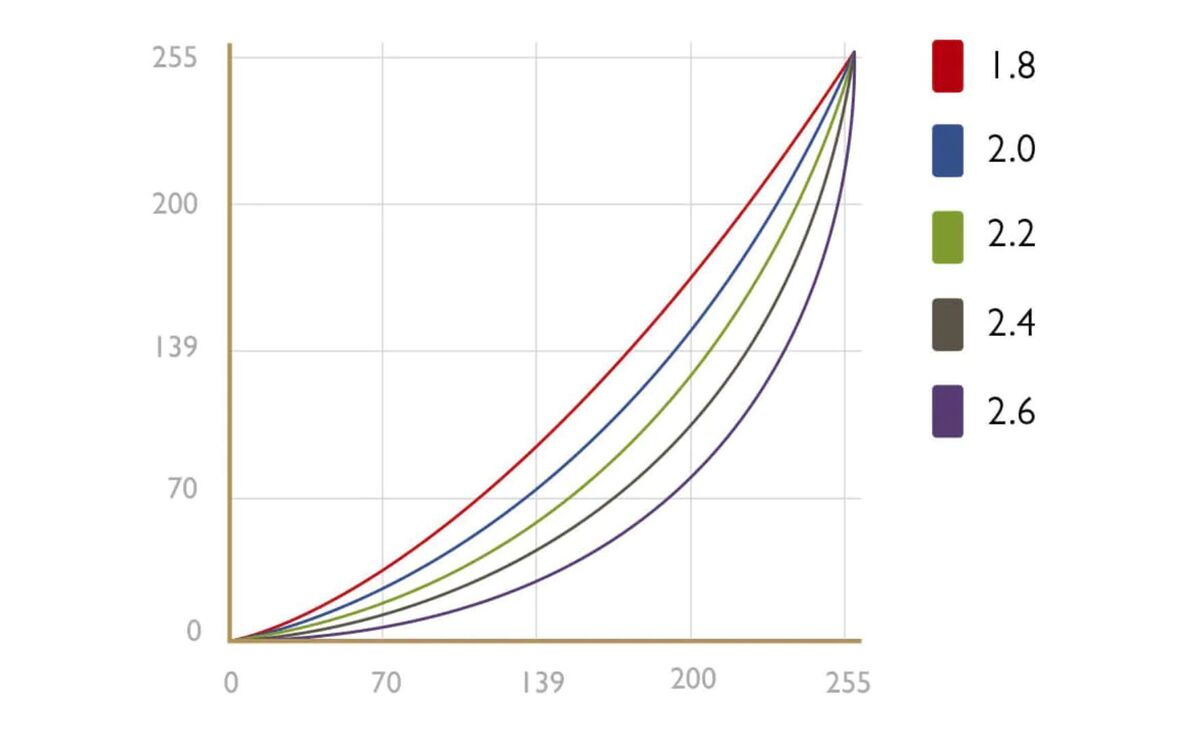

For our purposes, gamma describes how a monitor handles the luminance of an image sent to it. This is called display gamma. A high gamma value (such as 2.6) will appear deeper and may have more contrast, while a low gamma value (such as 1.8) will appear brighter and may show more detail in dark areas.

There's no "right" gamma value. However, the sRGB standard settled on a gamma value of 2.2, or something close to it, as the preferred value. This is a solid all-around option for a computer monitor. It's bright enough to be easy to use but offers decent detail in darker areas.
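To see what those gamma values do to an image, here is a short Python sketch. It assumes a pure power-law display, where output luminance is the normalized input raised to the gamma value. That's a simplification (the sRGB curve is only approximately 2.2), but it shows the trend.

```python
def relative_luminance(level, gamma, max_level=255):
    """Relative output luminance (0 to 1) for an 8-bit input level,
    assuming a simple power-law display response."""
    return (level / max_level) ** gamma

# Compare how a mid-gray input (128) is rendered at different gammas.
for gamma in (1.8, 2.2, 2.6):
    luminance = relative_luminance(128, gamma)
    print(f"gamma {gamma}: mid-gray displays at {luminance:.1%} of peak")
```

The same mid-gray input comes out brighter at 1.8 and darker at 2.6, which is why a low gamma reveals more shadow detail and a high gamma looks deeper.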

BenQ Gamma correction.

You need a calibration tool to precisely adjust gamma, but you can make improvements using the Lagom LCD gamma test image. As its instructions say, you'll want to sit back from your monitor (about five or six feet away) and look at the color bars, each of which is made up of several bands. You'll see a point on each bar where the bands start to blend together. The gamma value indicated where this occurs is your monitor's approximate gamma value.

If you see the bars blend around a value of 2.2, congratulations. Your gamma is already in the ballpark. If not, you'll want to make some adjustments. There are several ways to do this.

Your monitor may include gamma settings in its on-screen control menu. Less expensive monitors will have a selection of vaguely labeled viewing modes, like "office" or "gaming," with their own prebaked settings. You can flip through these while viewing the Lagom LCD gamma test image to see if they improve the gamma.

More expensive monitors will have precise gamma settings labeled with a gamma value, including a value of 2.2, which is usually ideal. Again, flip through the available settings to find one that appears correct while viewing the test image.

If neither option works, or your monitor simply lacks gamma adjustment options, you can try software that changes the gamma of your display. Windows users can use a utility such as QuickGamma. Driver software from AMD and Nvidia also offers settings to let you tweak gamma. MacOS users can consider Handy Gamma as a free option or look at Gamma Control 6 for in-depth options.

How to calibrate color temperature and white point

What you need to know: Color temperature is controlled by the color temperature or white point setting on your monitor. Look for a value of 6500K if available. Otherwise, open a blank white image or document and flip through the available color temperature options. Pick the one that looks best to you.

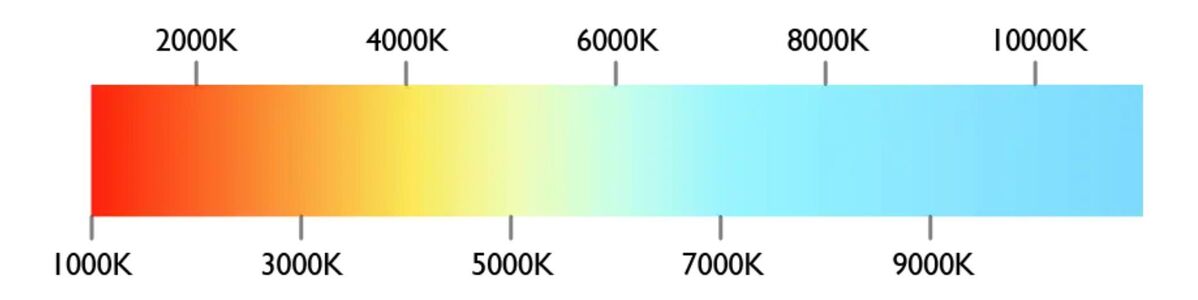

Color temperature describes how the color of your monitor skews between a "warm" or "cool" character. Lower temperatures provide a warmer look, which skews towards red and orange, while higher temperatures provide a cooler look, which skews towards blue and cyan. The term white point is often used interchangeably with color temperature.

Color temperature values are described as a literal temperature in degrees Kelvin which, frankly, is pretty weird if you're not familiar with display technology (and still a little weird if you are). But don't worry. Changing your color temperature won't start a house fire or even warm the room.

BenQ Color temperature values.

As with gamma, there's no absolute "correct" color temperature. It's even more variable because perceived color temperature can change significantly depending on viewing conditions. But, also like gamma, most image standards have settled on a generally agreed ideal value which, in this case, is a white point of 6500K.

No test image can help you target a specific white point. You need a calibration tool for that. However, most monitors will have several color temperature settings that you can flip through in the monitor's on-screen menu.
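For the curious, here is roughly how a measurement turns into a Kelvin value. A calibration tool reports the chromaticity of white as CIE 1931 (x, y) coordinates, and one common shortcut for converting that to a correlated color temperature is McCamy's approximation. The sketch below uses that formula. The sample coordinates are the published values for the D65 and Illuminant A reference whites, not readings from any particular monitor, and I'm not claiming any specific tool uses this exact method.

```python
def mccamy_cct(x, y):
    """Approximate correlated color temperature (Kelvin) from CIE 1931
    xy chromaticity using McCamy's cubic approximation."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449 * n**3 + 3525 * n**2 + 6823.3 * n + 5520.33

# Reference whites used as stand-ins for measured values.
samples = {
    "D65 (the 6500K target)": (0.3127, 0.3290),
    "Illuminant A (warm, incandescent-like)": (0.44757, 0.40745),
}

for name, (x, y) in samples.items():
    print(f"{name}: about {mccamy_cct(x, y):.0f} K")
```

The approximation is only reliable for whites reasonably close to the daylight and incandescent range, which covers the settings a monitor is likely to offer.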

Less expensive monitors will use vague values, such as "warm" and "cool," while more expensive monitors will provide precise color temperature adjustments, such as "5500K" or "6500K." MacOS includes color temperature adjustment as part of its default display calibration.

Outside of standards, color temperature is rather subjective. A truly out-of-whack gamma value can destroy detail, making dark scenes in movies unwatchable and dark levels in games unplayable. Color temperature problems are less severe. Even a very odd white point setting (like, say, 10000K) is usable, though most people perceive it as having a harsh, clinical look.

So, how do you dial in color temperature without a calibration tool? I find it's best to view a blank white screen, such as a new image or document, and then flip through the available color temperature settings. This will help you settle on a setting that fits your preferences.

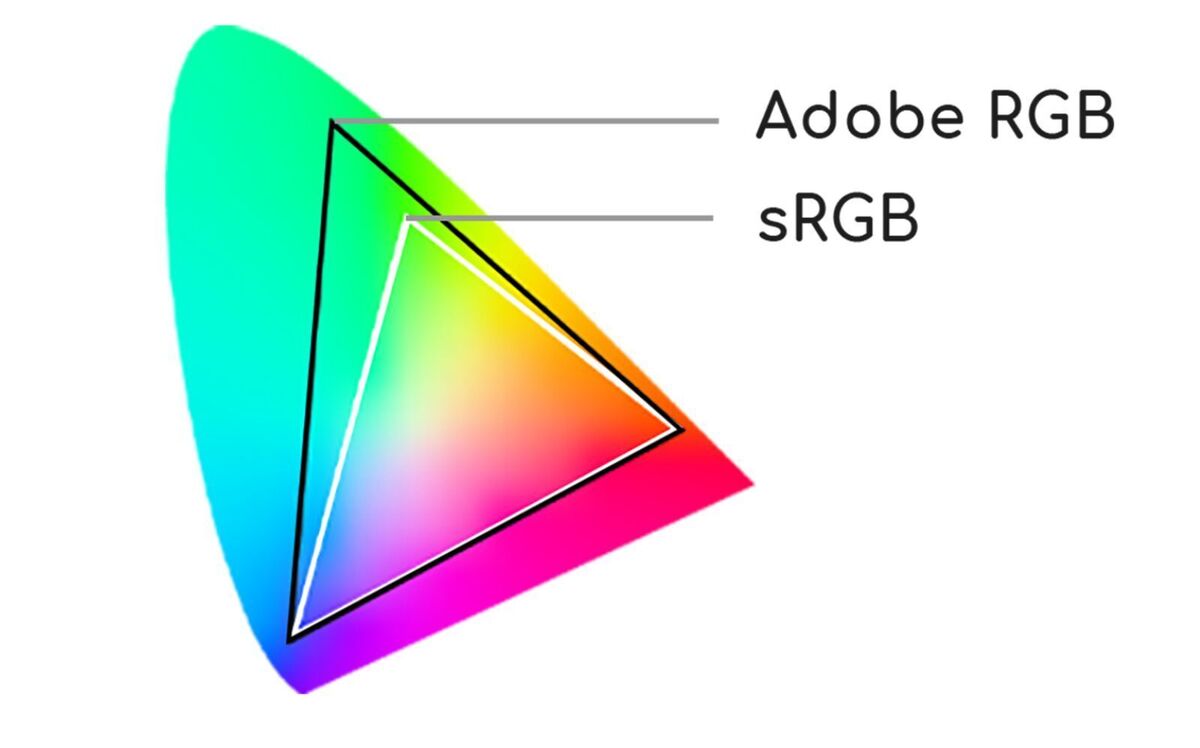

How to calibrate color gamut

What you need to know: Look for an sRGB mode if your monitor doesn't support a wide color gamut, or a DCI-P3 mode if your monitor does. This may lock your monitor's brightness to a lower level than you prefer, however.

A monitor's color gamut is the range of colors that it can display. Even the best monitors can't display every possible color in the universe. This is not only because of limitations in monitor technology but also limitations in how computers handle color data.

A color gamut is described in reference to a specific standard like sRGB or DCI-P3. You'll also see the term "wide gamut" used by monitors. This means the monitor supports a color gamut wider than the sRGB standard which, relative to other standards, is narrow. Most wide gamut monitors support DCI-P3 and Rec. 709.
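How much wider is "wide"? One rough way to compare is to plot each standard's red, green, and blue primaries on the CIE 1931 xy chromaticity diagram and measure the triangle they form. The sketch below does that with the published primaries for sRGB and DCI-P3. Area in xy space isn't perceptually uniform, so read the result as a ballpark figure rather than a statement about how many more colors you'll actually see.

```python
def triangle_area(primaries):
    """Area of the triangle formed by three (x, y) chromaticity points,
    computed with the shoelace formula."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2

# Red, green, and blue primaries in CIE 1931 xy coordinates.
SRGB = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]

ratio = triangle_area(DCI_P3) / triangle_area(SRGB)
print(f"DCI-P3 covers about {ratio:.2f}x the xy area of sRGB")
```

By this measure DCI-P3 is roughly a third larger than sRGB, with most of the extra room in the reds and greens.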

Acer

There's a big problem with color gamut on most monitors, however. The color gamut associated with a standard is often tied to other aspects of the standard you might not prefer, like gamma and brightness.

Worse, it's common for monitors to lock brightness and gamma controls when you select an sRGB, DCI-P3, or Rec. 709 mode. The theory is that you shouldn't be able to knock the monitor out of compliance with the standard while in these modes, which makes sense if you're working on a Pixar film, but doesn't make much sense otherwise.

In the end, color gamut isn't a very useful part of monitor calibration for most people. Try the sRGB or DCI-P3 modes, if available, but be prepared for disappointment if those modes lock your monitor's brightness and gamma.

Most people can achieve a boost to image quality by calibrating their monitor by eye. The result won't conform to any standard, but it will be noticeably different from the settings the monitor shipped with.

If you want to take calibration to the next level, however, you need a calibration tool. A calibration tool has a sensor that can judge whether your monitor's image conforms to accepted standards like sRGB and DCI-P3. This is especially important for color accuracy. There's no way to gauge color accuracy with the naked eye.

Datacolor Datacolor's SpyderX Pro.

Datacolor's SpyderX Pro is my preferred calibration tool. The SpyderX is extremely fast and simple to use, which is important, as calibration can become confusing and time consuming. The SpyderX Pro is great for most people and priced at a relatively affordable $170. X-Rite's i1Display Studio is another good option, though I haven't used the latest model. It's also priced at $170.

If you do buy a tool, you can throw most of the advice in this guide out the window. Calibration tools come with software you'll use with the tool and, after calibration, will load a custom display profile.

Do you need a calibration tool? No, not for most people.

A monitor calibration tool has become less important as monitor quality has improved. I've reviewed monitors for over a decade, so I've witnessed this progress first hand. Today's monitors are more likely than ever to have acceptable contrast, gamma, and color out of the box. Most ship at a default brightness that's too high, but that's an easy fix.

Even content creators may not need a calibration tool. Calibration is often considered a must for professionals, but the definition of professional is not what it used to be. Tens of thousands of self-employed creators make excellent content without ever touching a calibration tool. These creators don't have to conform to any standard aside from what they think looks great. It's true some creators have a reputation for remarkable image quality and slick editing, but most just use whatever they have at hand.

With that said, some professionals work for employers or clients who require content created to a standard like sRGB, DCI-P3, or Rec. 709. An employer or client may even have custom standards applicable only to work created for them. The film industry is an easy example: a film editor working at a studio can't just turn over footage edited to look however the editor prefers. That's when a calibration tool goes from a luxury to a necessity.

What about HDR?

PCWorld's guide to HDR on your PC goes in-depth on HDR, but there's something you should know as it relates to calibration: you can't do much to calibrate a monitor's HDR mode.

Asus HDR can rock your socks (and sear your eyeballs) but you can't tweak it.

Monitors almost always rely on the HDR10 standard when displaying HDR, and treat HDR just like an sRGB or DCI-P3 mode. In other words, activating the mode will lock the monitor to settings meant to conform to the HDR10 standard, disabling image quality adjustments you might normally use to calibrate the monitor. There are other technical hurdles, too, though they're outside the scope of this guide.

There's no solution for this as of yet. Monitors aren't alone in this. Consumer televisions face similar obstacles.

Calibration cheat sheet

Here's a quick summary of what you should do to calibrate a monitor.

- Set the display resolution of Windows or MacOS to the native resolution of your monitor.

- Select a scaling setting that makes small text and interface elements readable.

- Reduce brightness to about 200 lux (using a smartphone light meter for measurement).

- Adjust contrast so that all bars on the Lagom LCD contrast test image are visible.

- Set sharpness to the level you prefer.

- Adjust gamma so that bars on the Lagom LCD gamma test image indicate a gamma value of 2.2.

- Set monitor color temperature (also known as white point) to 6500K if that setting is available, or change it to your preference if it's not.

- Switch to an sRGB mode if your monitor has a standard color gamut, or DCI-P3 if your monitor has a wide color gamut.

- If you're willing to spend some cash for better image quality, buy a calibration tool like the Datacolor SpyderX Pro or X-Rite i1Display Studio.
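If you like to keep notes while you tweak, the numeric targets from this cheat sheet can be captured in a tiny Python checklist. The target values come from this guide. The tolerances and the `out_of_range` helper are my own placeholders, so adjust them to taste.

```python
# Target values from the cheat sheet; tolerances are assumed, not standardized.
TARGETS = {
    "gamma": (2.2, 0.1),
    "white_point_k": (6500, 300),
    "brightness_lux": (200, 50),
}

def out_of_range(measured):
    """Return the names of settings that miss their target by more
    than the allowed tolerance. Settings not measured are skipped."""
    issues = []
    for name, (target, tolerance) in TARGETS.items():
        value = measured.get(name)
        if value is not None and abs(value - target) > tolerance:
            issues.append(name)
    return issues

# Hypothetical readings taken by eye and with a phone light meter.
readings = {"gamma": 1.8, "white_point_k": 7500, "brightness_lux": 210}
print("Still needs adjusting:", out_of_range(readings))
```

Resolution, scaling, and sharpness are left out because they're either fixed by the hardware or a matter of preference.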

These tweaks will improve the image quality of any LCD monitor. The worse the monitor, the more noticeable the change will likely be. Today's best monitors are good out of the box, but entry-level monitors receive less scrutiny and allow more variance between monitors. Calibration won't make a budget monitor compete with a flagship, but it can make the difference between a washed-out image quality dumpster fire and a perfectly fine day-to-day display.

Source: https://www.pcworld.com/article/394912/how-to-calibrate-your-monitor.html

Posted by: evansknet1968.blogspot.com
